When Identity Manager was first released by Novell as DirXML 1.0 it was focused exclusively on synchronising objects between different directories, databases, and other sources. As time rolled on more features were added. With Novell Nsure Identity Manager 2.0 we got DirXML Script, a great language that provided a GUI and XML language for manipulating XML events. With Novell Identity Manager 3.5 we started to see the beginnings of the User Application.

This was the beginning of a user-facing aspect of IDM. Previously IDM was really just plumbing in the background: no one cared until it broke, and there was not even a sink at the front end to get water out of.

User Interaction

The User Application, as the Identity front end, provided functionality in many different areas. On the one hand there were Workflows, where the user could choose a Workflow, be presented with a form to fill in, and have actions taken based on that data. Approvals by a manager or an application owner, in parallel or in series, could be required.

I like to say that IDM automates the user provisioning process, but the User Application inserts a manual step in the middle. It seems counterintuitive, but there is a good reason. Blame! You need someone to make a decision, take responsibility for it, and accept the blame when it causes an issue. You may have heard of multicasting for imaging workstations, or streaming video. This is all about blamecasting.

There is a portal where users can change their passwords and, if given sufficient permissions, modify attributes about themselves in the Identity Vault. Of course, once those changes get written they are propagated to other systems by the IDM engine. The portal could also accept portlets to make it more of a multipurpose one stop shop for IT services.

Over time, the portal functionality has been removed release by release, as the interface has aged, and NetIQ did not want to support the portal functionality in the User Application.

Roles Based Provisioning Management

In IDM 3.6 with the RBPM 3.7 module, Roles Based Provisioning Management arrived, which added a nice abstraction layer to IDM. Previously a driver would be programmed in DirXML Script (or XSLT, or even ECMAScript) to look for certain criteria to grant access to resources. Perhaps all users whose Location attribute is Toronto get added to a group in Active Directory for Toronto users. Perhaps only users in Madrid get accounts created in a specific database application. This all worked surprisingly well, except there was overhead, since the rules needed to be changed fairly often. There were ways to try and abstract that out, perhaps using a Mapping Table that listed the access rights, so that rather than changing the policies you would just change the configuration data.
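To make the Mapping Table idea concrete, here is a minimal sketch of the pattern in Python rather than DirXML Script. The table contents, attribute names and the `grants_for` helper are all made up for illustration; the point is only that the criteria live in data, so adding Madrid means adding a row, not editing policy.

```python
# Hypothetical mapping table: attribute criteria -> access to grant.
# In a real driver this data would live in a Mapping Table object, not in code.
MAPPING_TABLE = [
    {"attribute": "Location", "value": "Toronto", "grant": "AD group: Toronto Users"},
    {"attribute": "Location", "value": "Madrid", "grant": "Database app account"},
]


def grants_for(user, table=MAPPING_TABLE):
    """Return the grants whose criteria match the user's attributes."""
    return [
        row["grant"]
        for row in table
        if user.get(row["attribute"]) == row["value"]
    ]


if __name__ == "__main__":
    print(grants_for({"Location": "Toronto"}))
```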

With Roles, you could assign a three level hierarchy of Roles to give some flexibility in design, and assign Entitlements (at first, Resources later) that would grant those access permissions using driver level Entitlements. Thus the Accounts Payable Business Role had as a child the HR Employee IT Role, which had a series of child Permission roles, one for each access permission needed. The net effect of being a member of the Accounts Payable role would be to get all the permissions inherited up from the children. (Yes, the model is kind of upside down from what you may be familiar with.)
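The "inherited up from the children" behaviour can be sketched as a simple walk down the hierarchy. This is only an illustration of the idea, not how RBPM computes it internally; the role names, the entitlement strings and the `effective_entitlements` function are invented for the example.

```python
# Hypothetical role data: parent role -> child roles.
CHILD_ROLES = {
    "Accounts Payable (Business Role)": ["HR Employee (IT Role)"],
    "HR Employee (IT Role)": ["Permission: AD account", "Permission: Finance DB"],
}

# Hypothetical: Permission role -> entitlement it grants.
ENTITLEMENTS = {
    "Permission: AD account": "AD driver: account",
    "Permission: Finance DB": "JDBC driver: finance account",
}


def effective_entitlements(role):
    """Collect the entitlements of a role and of every role beneath it."""
    found = set()
    seen = set()
    stack = [role]
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # guard against a role being reachable twice
        seen.add(current)
        if current in ENTITLEMENTS:
            found.add(ENTITLEMENTS[current])
        stack.extend(CHILD_ROLES.get(current, []))
    return found


if __name__ == "__main__":
    print(sorted(effective_entitlements("Accounts Payable (Business Role)")))
```

A member of the top level role ends up with everything granted by the Permission roles at the bottom, which is the upside down inheritance described above.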

Now instead of focusing custom coding on what permissions each set of criteria gets, the focus is on granting those roles as automatically as possible (usually based on HR data) and handling exceptions manually through the User Application request workflows. Groups and containers can be assigned Roles, making it easier to handle automatic Role assignment. Dynamic Groups can be assigned Roles as well, which makes Role assignment simpler than coding it in policy. It can just be done in an LDAP filter, if that meets your needs.
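As a rough idea of what "just an LDAP filter" looks like, the sketch below builds a filter for the Toronto example. The object class, the `l` attribute name and both helper functions are assumptions for illustration; your vault schema may well use different names. The escaping follows the reserved characters listed in RFC 4515.

```python
def escape_filter_value(value):
    """Escape the characters RFC 4515 reserves inside a filter value."""
    replacements = {
        "\\": r"\5c",
        "*": r"\2a",
        "(": r"\28",
        ")": r"\29",
        "\x00": r"\00",
    }
    return "".join(replacements.get(ch, ch) for ch in value)


def location_filter(location):
    """Build a filter matching users in one location (attribute names assumed)."""
    return "(&(objectClass=inetOrgPerson)(l={}))".format(escape_filter_value(location))


if __name__ == "__main__":
    print(location_filter("Toronto"))
```

A Dynamic Group carrying a filter like this, with a Role assigned to the group, replaces the policy code that used to test the Location attribute.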

Over the years, the interface to the User Application has been criticised for its look and feel: modern interfaces moved on, but the User Application interface stayed much the same. All along, the response had been that the current interface is a reference. You can always just go and write your own in whatever framework you desire, and make the SOAP calls to the back end to perform the actions you need.

In Identity Manager 4.5 NetIQ went out and actually did that. You can see the results as the Identity Manager Home and Provisioning Dashboards. (Technically they first released it for IDM 4.02 as an Enhancement Pack, but it is fully part of 4.5 and support for 4.02 has ended.)

Using more modern Web 2.0 style approaches in the Home/Dash interfaces allowed them to build something that looks more like today’s web pages and leverages modern web tool kits and frameworks, while looking good on a tablet or a laptop display. However, there is a consequence. Previously the user would authenticate to the User Application itself, perhaps via an SSO method, perhaps just with a username and password, and that was it for authentication. Now, each module needs to authenticate the user.

Single Sign On

Adding in a new login for every single module they added (Home, Dash, SSPR, Reporting, Catalog Access, Access Review) would be ludicrous and unacceptable, so they looked around the product suite, and the general market for an approach to use to link them all together.

On the one hand, NetIQ owns NetIQ Access Manager (NAM), so they could have included the Access Manager Appliance, and required yet another server to run it and manage the Single Sign On aspects of the product. They could have written something new from scratch, or just required some other SSO product to be in place.

Instead they looked at the xAccess product line (x here meaning Mobile, Social, or Cloud) which had a similar issue and they took the federation components out of Access Manager, and made a much thinner, simpler product called OSP (One SSO Provider). Amusingly, I was at a conference talking with the architects of OSP and NAM, and they apparently sit across the hall from each other in Provo, so that is a good sign.

In order to simplify OSP, they really took only the SAML federation and OAuth provider components out of NAM, and left behind form fill, identity injection and some of the other cool stuff NAM can perform. (In some ways, they stripped NAM down until it could only do as little as a product like Okta can do.) As a full fledged Access Management platform, OSP would thus be considered lacking. As a simple federation tool it is actually pretty good.

Next they made their new (and old) applications use OAuth for ticketing of permissions, and OSP the ticket granter and authentication front end. This meant that User Application itself, which is still there under the covers, and still used to process workflow form rendering and workflow processing had to accept OAuth for authentication. To replace the password management and user profile management aspects, SSPR (Self Service Password Reset) was added to the suite and can accept OAuth tickets.

This has some important consequences under the covers that are poorly discussed. The biggest one I have seen is how User Application logs a user into eDirectory. One of the clever bits of NetWare was that an NLM could run a process as any specified user and then only see what that user's permissions allowed. User Application uses the logged in user's permissions in eDirectory for a myriad of things.

For example, only the PRD (Provisioning Request Definitions) Workflows that the user has eDirectory permissions to see are shown as available. Thus the need for an IRF (Inherited Rights Filter) at the User Application driver level (usually a smidge lower) to block the BC (Browse and Compare) Entry rights, else every user can see every workflow.

When running a workflow that looks up say a list of locations to render in a list, the query performed only returns the locations the user logged in has permission to see. This way the User Application can delegate the handling of permissions to eDirectory which has a much more scalable and granular model for permissions. In fact even the permissions inside User Application for handling the System Roles (Role Manager, Resource Administrator) use eDirectory permissions for granularity down to the Role or Resource level.

In the olden days, before Single Sign On, this was easy. The user logged in to the User Application front end, passed in a username and password, then User Application did an LDAP bind to the directory with those credentials. However, once SSO starts being used, even before OSP, no password is passed, so how can User App connect to LDAP as a specific user?

The solution back in IDM 4.0 was to use an NMAS method that supports SAML federation to eDirectory. (By the way, SAML is Security Assertion Markup Language, an XML standard that allows secure web domains to exchange user authentication and authorisation data.) In IDM 4.0 you needed to manually configure this (which honestly was not that hard, just fiddly).

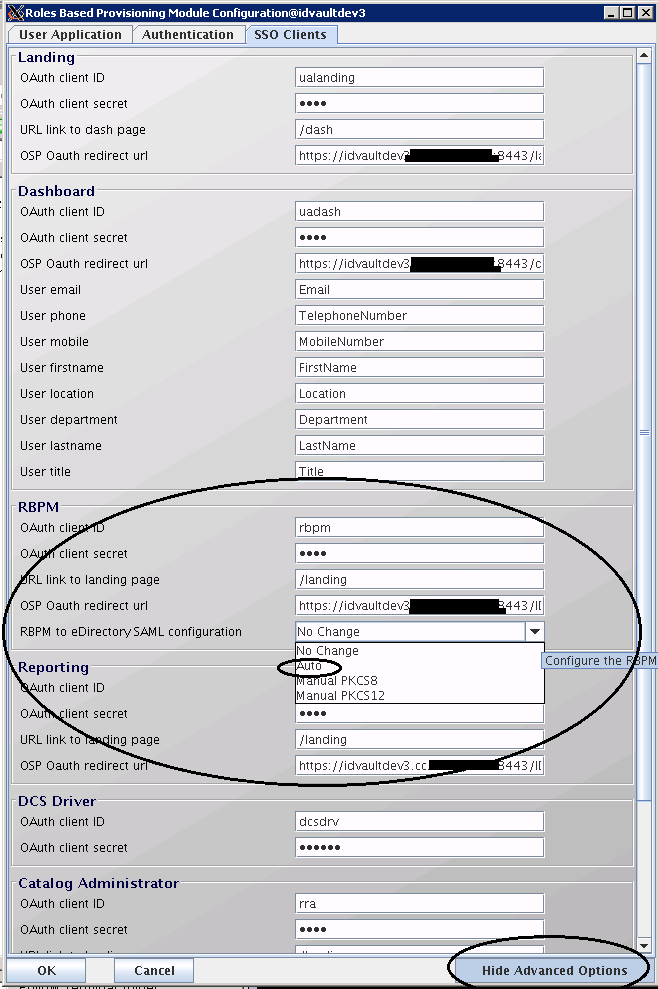

In IDM 4.5 it is now an option in configupdate.sh, making it much simpler (Figure 1). Look on the SSO Clients tab, in the RBPM section, and the fourth line is named: RBPM to eDirectory SAML configuration.

When you first run configupdate via the installer it defaults to Auto, which creates the Trusted Cert in the Security container and the authSamlAffiliate object, and links the two. After that first run it defaults to No Change, which is what you want.

This of course means that if your CA (Certificate Authority) expires or breaks (because you replaced the server it was running on and did not properly move it), this will suddenly start to fail.

This also adds an important timeout consideration. This federation (User Application to eDirectory for LDAP/NCP access) can time out independently of the User Application web interface, and independently of any SSO between the other things involved. You will see this manifested when you are inside a workflow and some component tries to query eDirectory (to show a list of values from the vault) and errors appear when trying to render it.

OSP itself supports three different authentication methods:

- The basic username/password is how most customers first deploy OSP. This is no different from the previous way User Application would log in. But User Application will still need to federate your login to eDirectory via NMAS, since OSP passes an OAuth ticket, not the username/password combo, to User Application.

- Kerberos authentication is the second method, where the currently logged in Windows workstation’s Kerberos ticket can be used by OSP. So now the password is sent to Active Directory (or MIT Kerberos if you have that running), which generates a Kerberos ticket that OSP trusts, so it will issue an OAuth ticket, which User App will trust to then federate over SAML to eDirectory. Can you see the web of trust we weave when we wish to deceive? Remember that there is a distinct timeout for each step of that process. Try to keep them all the same, otherwise odd effects are seen by end users.

- The third method is SAML federation. This is officially supported with NAM only. It is however standards based, and OSP being used in xAccess products accepts SAML from a number of providers, so we know it does actually work. Personally I have gotten it working with Shibboleth and I know others that work as well.

In the SAML case, the user goes to a protected URL, which forces them to authenticate via the IDP (Identity Provider). Once they have authenticated there, a SAML Assertion is returned to OSP, and it grants an OAuth ticket to User Application, which then does a second (and completely independent) SAML federation to eDirectory. Just to make this fun, the SSO tool being used for SAML can support Kerberos already, adding one more step to the trust chain.

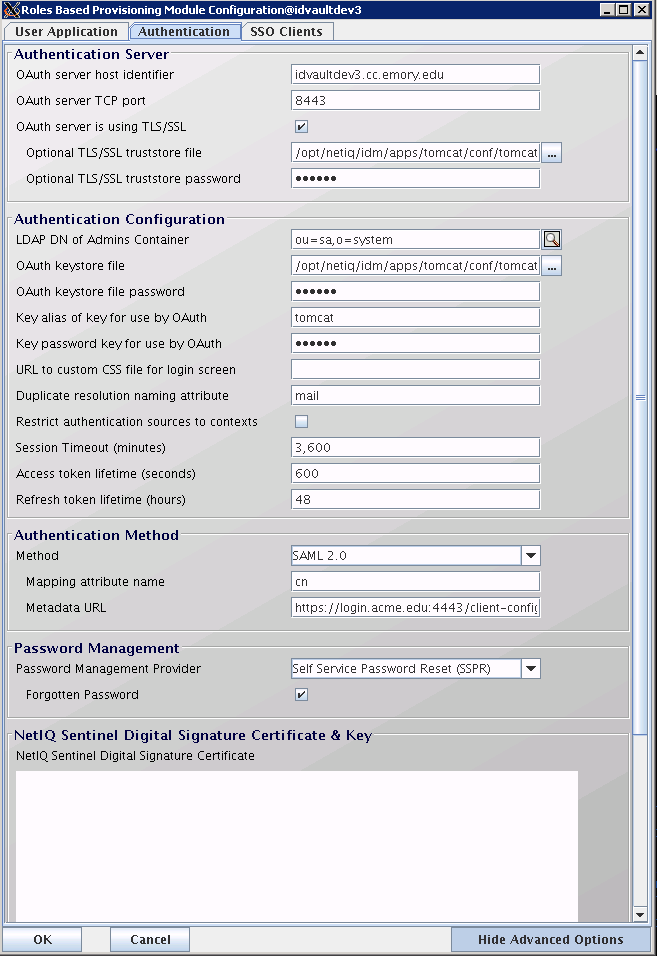

To choose between these options, you use configupdate.sh, go to the Authentication tab, and in the Authentication Method section there is a selector with the three options (Name/Password, Kerberos, or SAML 2.0) as shown in Figure 2.

The SAML example is possibly the most interesting. Here you get only minimal choices. The first is the attribute to map as the username: basically, what is sent in the returned SAML Assertion and used to find the user in eDirectory. The second is critical: the Metadata URL, which your SAML IDP administrator must point you at.

That metadata document will include the public key (Base64 encoded) of the certificate used to secure the trust. You need to export that public key from the XML at the URL (or the IDP administrator can give it to you; NAM, as it happens, can automatically extract it, but that functionality is stripped out of OSP) and import it into the keystore referenced at the top of the tab in the Authentication Configuration section. I personally prefer to use one keystore for Tomcat and OSP at the same time, since it simplifies key management.
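If you have to pull the certificate out of the metadata by hand, something along these lines can save some copy and paste. It is a sketch, assuming the IDP publishes standard SAML 2.0 metadata with the certificate in an XML Signature `X509Certificate` element. The function name is my own, and it returns every certificate it finds, so check you import the signing one.

```python
import xml.etree.ElementTree as ET

# XML Signature namespace used for KeyInfo elements in SAML metadata.
DS_NS = "http://www.w3.org/2000/09/xmldsig#"


def certs_from_metadata(metadata_xml):
    """Return each X509Certificate in the metadata as a PEM formatted string."""
    root = ET.fromstring(metadata_xml)
    pems = []
    for node in root.iter("{%s}X509Certificate" % DS_NS):
        # Metadata often wraps or indents the Base64 text; strip all whitespace.
        b64 = "".join((node.text or "").split())
        if not b64:
            continue
        # PEM convention: 64 characters per line between the markers.
        lines = [b64[i:i + 64] for i in range(0, len(b64), 64)]
        pems.append(
            "-----BEGIN CERTIFICATE-----\n"
            + "\n".join(lines)
            + "\n-----END CERTIFICATE-----\n"
        )
    return pems
```

Save the metadata document from the Metadata URL to a file, feed its contents to the function, write the result out as a `.pem` file, and import that into the keystore with whatever tool you normally use (Java's keytool with `-importcert`, for example).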

That section also holds the session timeout and the access token lifetime. The Session timeout (minutes) should be kept the same as the NMAS SAML federation timeout, set on the authSamlAffiliate object (created as an object under the SAML Provider, under the Authorised Login Methods, in the Security container) in the authsamlValidAfter attribute (seconds). The SAML federation from your IDP should be kept to a similar time period. That one is usually harder to get changed, so set your others to match.
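Since one of these values is in minutes and another in seconds, it is easy to get them out of step. A trivial sanity check like the sketch below makes the comparison explicit. The function and its parameter names are hypothetical, and I am assuming the IDP timeout is quoted in minutes; you would read the real values from configupdate, the authSamlAffiliate object and your IDP administrator.

```python
def check_timeouts(osp_session_minutes, nmas_saml_seconds, idp_session_minutes):
    """Compare the timeouts in the trust chain, all converted to seconds.

    Returns (shortest, mismatched): the name of the shortest timeout and the
    sorted names of any timeouts that differ from it.
    """
    in_seconds = {
        "OSP session timeout": osp_session_minutes * 60,
        "NMAS SAML federation (authsamlValidAfter)": nmas_saml_seconds,
        "IDP SAML session": idp_session_minutes * 60,
    }
    shortest = min(in_seconds, key=in_seconds.get)
    mismatched = sorted(
        name for name, secs in in_seconds.items() if secs != in_seconds[shortest]
    )
    return shortest, mismatched


if __name__ == "__main__":
    # A 60 minute web session with a 180 second NMAS federation: the workflow
    # form lookups would start failing long before the web session ends.
    print(check_timeouts(60, 180, 60))
```

Whichever link is shortest is the one users will hit first, so that is where to start looking when forms stop rendering partway through a session.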

Web of Trusts

Using this approach, the various web components of IDM all share a single login, whether the old way (username/password), via the existing Kerberos login of your workstation, or using your existing SSO web infrastructure. The key takeaway is to understand the web of trusts involved, so you know where to start looking when something does not work.

This article was first published in OHM34, 2016/3, p8-11.